Will ChatGPT and AI Kill the Investment Banking Industry and Other “Knowledge Worker” Jobs?

Unless you’ve been hiding under a rock, you probably noticed the excitement, hype, and borderline panic when ChatGPT from OpenAI was released in November 2022.

If you haven’t yet created an account or tried it, you should – because it is quite impressive.

You can ask the tool almost any question, and it will generate text or computer code in response (with some restrictions).

The technology behind it is too complex to explain fully here, but it uses supervised and reinforcement learning, trained on a huge amount of text from the Internet (as of 2021), to generate text based on statistical probabilities (Wikipedia has a good summary).

Some people have predicted that ChatGPT and similar artificial intelligence technologies will destroy all white-collar jobs, others have predicted massive changes but no job destruction, and others are still unconvinced by the technology.

This leads us to the possible impact on investment banking and other finance-related roles.

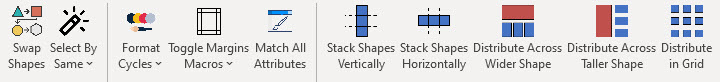

I’ve used ChatGPT over the past few weeks as I’ve been working on a macro package for a new version of our PowerPoint course (now available!).

I’ve also tested it for industry research and financial model outlines.

In some ways, it has been very impressive; but it has also wasted time and led me down rabbit holes.

So, I’ll take a “centrist” approach here, explain my findings, and predict how it might affect recruiting, the job itself, and even education at all levels:

My Take on ChatGPT and Other AI Tools: The Short Version

If you ran this article through ChatGPT and asked it to write a summary, here’s what it might produce:

- Yes, it’s very significant, and it might make the same impact the Internet and mobile have made over the past few decades. But there are also many risks and ways it could go awry.

- AI will affect almost every industry that involves sitting at a computer and writing, designing, or coding; it may not “replace” humans, but it might limit job growth in certain areas.

- The more “client-facing” or “physical reality-facing” your job is, the less will change because ChatGPT cannot get drinks with potential clients or build a house.

- The more “precision” required by your work, the less you’ll be affected because AI tools are useful mostly in cases where 90% correct answers are fine. This is why human pilots still fly planes and why self-driving cars are… “more difficult” than many imagined (to be polite).

- Finance recruiting may shift away from automated tools like HireVue and online tests and move back toward in-person assessments or online tests where you need to share your screen with another human watching the whole time.

- AI will change the nature of the IB job to some extent, but its impact may be less than in fields like programming/marketing/design because of the job’s client-facing/emergency/meticulous nature.

- I predict many legal/regulatory/compliance battles as people argue about what is human vs. machine-written and whether AI tools can take their content from humans without citing their sources or referring people to the originals. Expect the amount of junk on the Internet to skyrocket.

- The real threat is the trajectory, i.e., the levels these tools might reach one day. For example, I don’t think any AI tool could write even a mediocre 1,000-page novel anytime soon. But a short story? A 10-page sales letter? An equity research report? Who knows?

I’ll expand on these points below, but I want to start with the results of my own testing:

My Results from Using ChatGPT for Finance and Coding-Related Questions

While I was working on various VBA macros in PowerPoint, I tried using ChatGPT to write new code and explain existing code.

It was useful for creating a “rough draft,” but it kept making obvious errors that a human could detect in a second.

It functioned as a refined/faster version of Google search because I could type in natural questions and get the answers directly in text rather than poring through search results.

I found ChatGPT more useful for explaining code than for writing it.

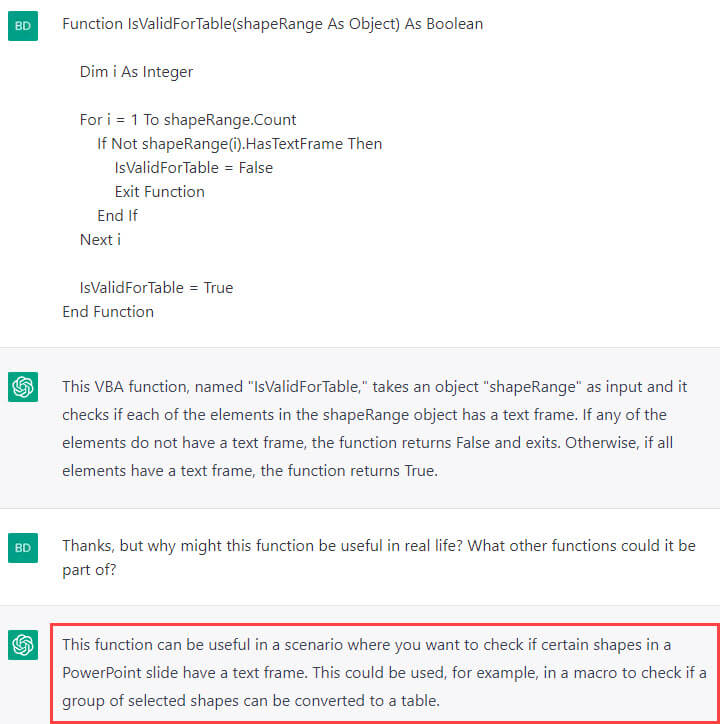

Let’s start with a quick example of simple VBA code that it explained fairly well:

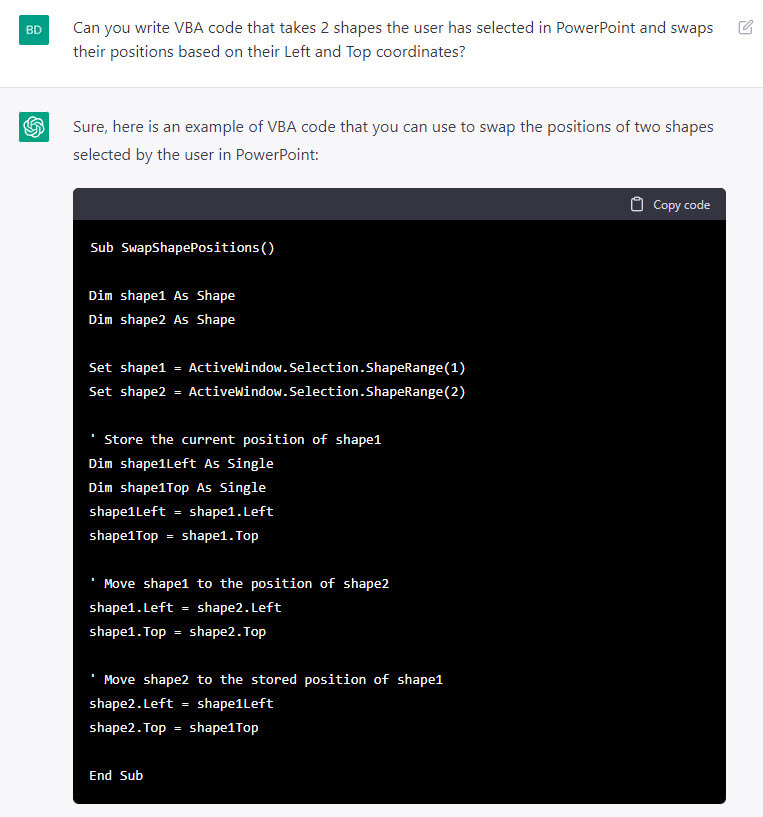

It also performed decently when I asked it to write simple functions:

This code is incomplete because it doesn’t check for various errors, such as the user not having 2 shapes selected, but it’s a decent start.

On the other hand, ChatGPT also led me down rabbit holes that went nowhere.

I won’t paste in the full dialogue and code here, but, in short: I was having some trouble with a “Copy and Paste Table Formatting” macro and asked ChatGPT for help.

It wrote code that seemed reasonable but relied on functions that do not exist in VBA, so it solved nothing.

I pointed this out, and it kept suggesting alternatives that also didn’t work. So, it cost me time, and I eventually resolved it by writing code to copy and paste specific formats on a per-cell basis.

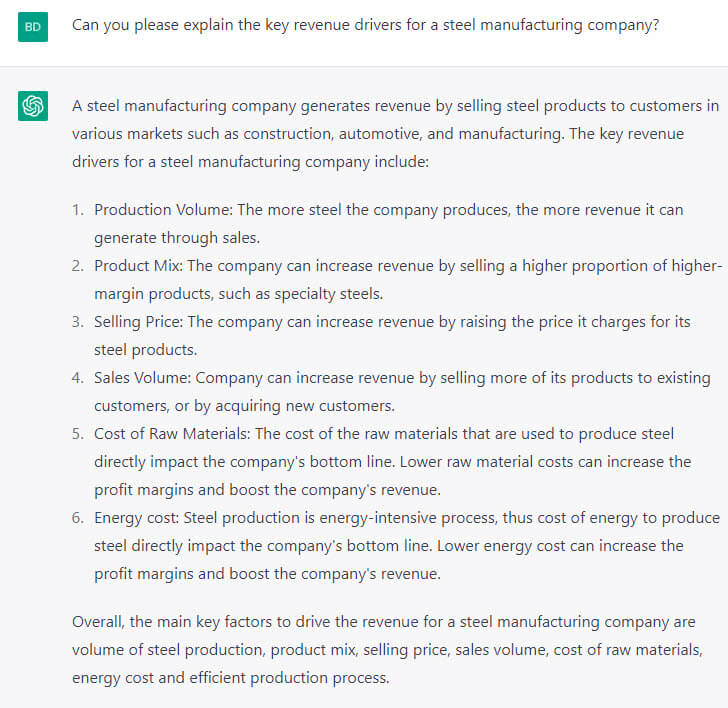

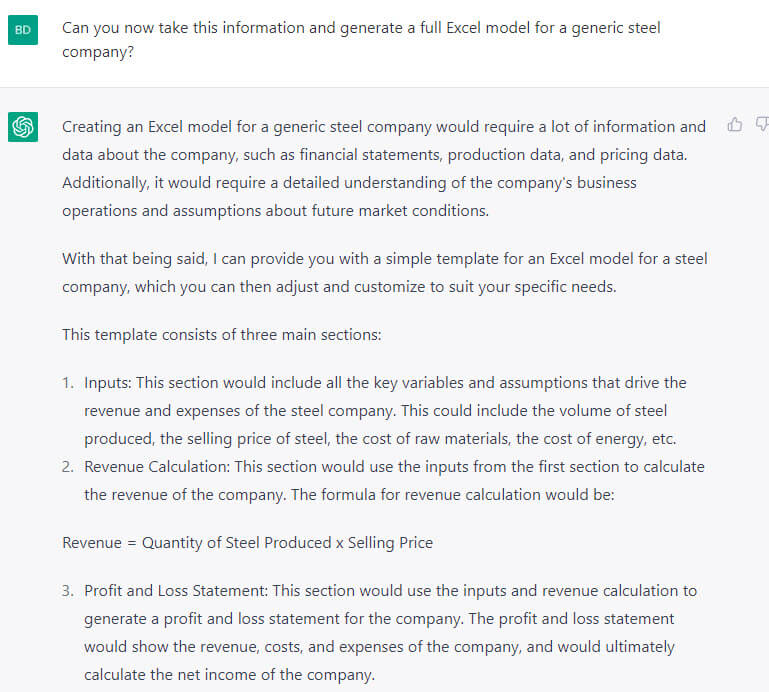

I also asked ChatGPT about the revenue drivers for a steel manufacturing company:

It’s missing a few things, such as CapEx to determine the production capacity, but it’s a decent response.

I then asked it to build a full Excel model, which it could not do, but it did offer a simple outline:

Again, it misses some critical factors, such as the relationship between CapEx, cash flow, and production capacity, but it’s decent if you have no idea where to start.

On the other hand, it cannot scan an investor presentation and 10-K and tell you what to do, and it cannot produce anything outside of text/code.
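To make the missing CapEx link concrete, here is a minimal Python sketch of how growth CapEx could feed production capacity, volume, and revenue. It is only an illustration under stated assumptions: the function name `project_steel_revenue`, every input figure, and the one-year lag between spending and new capacity are hypothetical, and it ignores the cash flow constraint on how much CapEx the company can actually fund.

```python
def project_steel_revenue(capacity_tons, growth_capex, capex_per_ton,
                          utilization, price_per_ton, years):
    """Project revenue where growth CapEx adds capacity in the following year."""
    rows = []
    for year in range(1, years + 1):
        # Revenue driver: tons sold = capacity * utilization, times price per ton.
        volume = capacity_tons * utilization
        revenue = volume * price_per_ton
        rows.append({"year": year, "capacity": capacity_tons,
                     "volume": volume, "revenue": revenue})
        # Assumption: capacity bought with this year's CapEx comes online next year.
        capacity_tons += growth_capex / capex_per_ton
    return rows


projection = project_steel_revenue(
    capacity_tons=1_000_000,   # hypothetical starting capacity (tons)
    growth_capex=50_000_000,   # hypothetical annual growth CapEx ($)
    capex_per_ton=1_000,       # hypothetical cost to add 1 ton of capacity ($)
    utilization=0.80,          # hypothetical utilization rate
    price_per_ton=700,         # hypothetical steel price ($ per ton)
    years=3,
)
for row in projection:
    print(row["year"], round(row["capacity"]), round(row["revenue"]))
```

In a real model, the CapEx figure would itself depend on the cash flow the company generates, which is the circular relationship the outline left out.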

The Limitations of ChatGPT and Other AI Tools

Many people have pointed out that ChatGPT often generates coherent, impressive-sounding answers that are completely wrong.

As they’ve rightly observed, ChatGPT does not “understand” concepts in the symbolic/abstract way humans do; it simply predicts the next word in the sentence.
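As a rough illustration of what “predicting the next word” means, here is a toy Python sketch. It is not how ChatGPT works internally (real language models use neural networks trained on vastly more text); the tiny corpus and the function names `build_bigram_counts` and `predict_next` are made up for this example.

```python
from collections import Counter, defaultdict


def build_bigram_counts(text):
    """Count how often each word follows each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical one-sentence "training set"
corpus = "the bank advised the client and the bank closed the deal"
counts = build_bigram_counts(corpus)
print(predict_next(counts, "the"))  # prints "bank"
```

The sketch simply picks whichever word most often followed the previous one, with no notion of whether the resulting sentence is true.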

These are both problems, but another issue is that it cannot do the work.

In other words, even if you ask it to write code for you, it can’t insert the code into a production environment, run it, test it, find bugs, and tell you what needs to change.

It also can’t interview customers, find sources on the ground, or call a friend who knows the obscure details of a specific technical problem you’re facing.

Future versions of these tools might address these integration issues, but I don’t think AI will be capable of “interviewing” humans in real life anytime soon.

How Will AI Change Investment Banking Recruiting?

First, it may become harder to contact people and get responses because they’ll be even more inundated with email spam generated by these tools.

Many finance professionals already get a lot of requests for informational interviews and advice, but most of these requests are poorly written and impersonal.

With ChatGPT, students will put in even less effort, but now their emails will appear coherent.

We’ve recommended email and even LinkedIn messages over cold calling, but that might change as the signal-to-noise ratio worsens.

If that happens, in-person networking via information sessions and weekend trips could become more important and effective.

Second, many methods banks have used to “automate” the recruiting process might backfire.

What’s the value of an online verbal or math test if people can type the questions into ChatGPT and get answers?

Even something like HireVue may become less useful as fake videos generated in real time become plausible.

I predict that banks and PE firms will move to more in-person, on-site tests and evaluations where Internet and phone use is disallowed.

So, expect more 60-minute LBO modeling tests and fewer “take-home” private equity case studies.

We’ll probably see the same thing happen in education, from elementary school to university.

The irony is that banks pushed to “automate” everything and make recruiting impersonal, but due to the advancement of technology itself, recruiting will become more personal.

Of course, I assume that banks care about recruiting high-quality candidates, which is debatable.

What About the Investment Banking Job Itself?

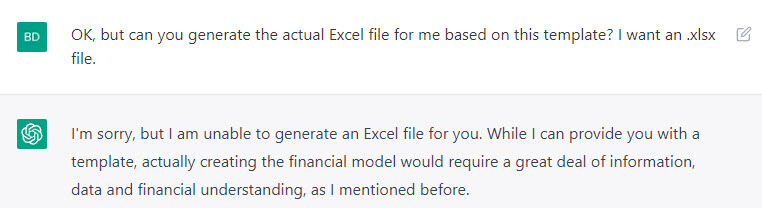

I tried to get ChatGPT to generate an actual Excel model, but it kept refusing:

But if Microsoft ends up investing $10 billion in OpenAI, you can bet that Office integration will be on its way soon.

That said, I’m not sure it matters because almost everything in banking is already based on templates and existing slides and models.

In other words, these AI tools do the same thing your VP or Associate does when outlining a presentation: combine content from other sources.

But in IB roles, most time is consumed not by the “outline” or “rough draft” but by the 78 revisions your presentation goes through as different people make changes.

And the current tools are bad at these types of refinements and small changes; it’s often faster to make the changes yourself than to explain what you want.

Even if they get to the level where they can cross-check every single detail and footnote in a 100-slide presentation, you can bet that bankers will still make humans review it manually.

In terms of IB work products, these tools will probably be the most useful for the confidential information memorandums (CIMs) and “teasers” used to market companies to potential buyers.

Most of these documents have long, boring passages that humans copy and paste from other sources.

AI tools could reduce drudgery by setting up the basic document and letting the human bankers focus on how to “sell” the company rather than finding all the boring parts.

If you’re wondering about higher-level roles in banking, such as Managing Directors, I don’t see these tools changing much anytime soon.

At the senior levels, it’s still a relationship-driven, client-facing business in which the clients pay banks millions of dollars for their full attention.

I don’t think the CEO of a $50 billion company would be satisfied if he asked for advice on an acquisition and the MD brought in a chatbot to answer his questions.

Some serious legal/regulatory/compliance issues might also prevent these tools from being widely adopted in banking.

For example, if the content in a CIM or teaser is generated based on leaked documents or random comments on Reddit, can banks be held liable if the information is wrong? Would OpenAI be subject to a lawsuit?

What would compliance departments say? And will they have to start scanning documents to find AI-generated text?

The bottom line is that AI and language models will impact IB and other finance roles, but I expect less of an immediate impact than in areas like the news, marketing/copywriting, digital art, and even routine programming.

I don’t necessarily think the technology will “kill jobs,” but it may constrain their growth.

The bigger threat for finance is an unfavorable macro environment, like the one we’re currently in.

Final Thoughts on ChatGPT, AI, and Finance Careers

I was listening to a podcast last week where the hosts predicted that ChatGPT would change the world more in the next 5 years than the Internet has in the past 30 years.

I agree this is possible, but there’s also a huge range of outcomes and, therefore, significant uncertainty.

If you work in an office doing “knowledge work,” all these tools must be on your radar ASAP.

But I don’t think you should avoid industries or careers due to AI because there’s no telling how everything will play out.

If you want to reduce your risk, aim for jobs with significant client interaction and tasks that require precision.

No job is “safe” from AI, and all industries will be affected by ChatGPT and its successors, but it’s premature to conclude that The Matrix will be the result.

On the bright side, these tools might reduce investment banking hours and let you handle those 3 AM emergency requests more easily…

…but they could also do the same thing Excel did decades ago: allow senior bankers to request more work in the same amount of time.

Either way, I’ll be eager to accept the new AI overlords if they can format Excel files more efficiently than my current macro.

—



Comments


This article is on point. But to be fair, the effectiveness and accuracy depend a lot on how you structure questions. I had similar issues when I first started using it, but with some practice, it’s possible to get better.

That is true, but it also depends on your work style. For example, I don’t like using these tools for long-form articles: you have to prompt them for changes so many times, and then read each finished version completely, that it’s faster to write the articles myself. I write one draft quickly, do a single editing pass, and that’s it.

Overall, I’ve found ChatGPT most helpful for 1) Documenting and commenting on code; 2) Writing/assisting with code; and 3) Actual writing, in that order. But I have to admit I never do much “mechanical” writing – each article, case study, etc., usually requires a fair amount of creativity and doesn’t closely resemble previous ones. So I could see these tools being more helpful for other writers.

Do you think the Private Equity industry is more susceptible to being automated with ChatGPT in comparison to IB since PE is less client-facing?

No, not really, because PE firms also have “clients” (the Limited Partners) and many tasks related to deals and portfolio companies cannot be automated easily. The main use case of ChatGPT in all these fields is to speed up the process of writing/summarizing memos, presentations, etc., which is fine, but it’s only a small part of what you actually do on the job. Another issue is that PE firms are not going to give up all their data to OpenAI or other firms just to “become more efficient” – even services like Capital IQ can’t get the proprietary data with offers to pay a lot for it. So why would they hand it over for free to MSFT, GOOG, or OpenAI?

I think the bigger risk is that AI might disrupt many of their existing investments, make some industries less viable, and completely change cost structures.

Interesting, that makes sense. Thanks for the in-depth answer.

I’m curious if your views have changed a bit in terms of automation affecting the Investment Banking industry after seeing the demo of Microsoft Copilot. Seems like an industry disruptor.

Nope. Anyone who’s familiar with the workflow at banks and how they do things internally can tell you why this is the case: 95% of your work consists of “Client X wants us to make Small Changes A, B, and C… or Managing Director Y wants us to change Small Points D, E, and F.” You end up going back and forth on documents dozens of times as people keep requesting small tweaks. All these automation/AI tools are good for rough outlines if you have no starting point, template, or idea of what you want to write. But if you have all those, and your work consists of making tweaks the client wants to see, they’re less impactful. “Prompt engineering” is not an efficient way to make small changes in a 90-page document because you have no idea what changes each new prompt will make, and you don’t want to keep reviewing all 90 pages in each revision.

At most, bankers will say, “Aha, you can draft presentations more quickly now. I’m going to ask you to create or revise twice as many slides!” Oh, and banks are notoriously slow to adopt outside tools. Many banks won’t even let people use simple external macros with completely predictable behavior, let alone platforms where you share confidential client data with OpenAI / Microsoft.

The main risks for IB are still related to macro factors and, specifically for AI, the fact that the cost structures and dynamics of various industries may change quite a bit, reducing visibility in deal volumes/types by sector.

Thanks for the insight!

Nice article, but could you make more articles on S&T, whether it be culture, AMAs, covering different products, etc.?

I hope to do this as soon as ChatGPT includes a sales & trading module…

(There will be more S&T and HF content on the site this year, but it takes a long time to research and write, so no promises on dates.)

Have you considered using ChatGPT to write an article like this? It might be a huge time saver.

No. It’s still pretty bad for longer/technical content, and it would take me longer to read what it produces and keep asking for rewrites than to write it myself. Also, I expect Google to start analyzing sites for human vs. machine-generated content and penalizing anything that looks even slightly artificial. And there’s already too much low-quality junk out there, so I don’t want to add even more junk to the mix. I think it might be useful for coming up with variations for email subject lines and social media / ad campaigns.